Using Terraform, Ansible and GitHub Actions to automate provisioning and configuration of workloads in the homelab

Around six months ago I converted all of the internal cluster workloads in k3s from manually installed Helm releases to ArgoCD applications. This has made upgrading everything within the cluster a breeze. I’ve thought about doing the same for the VMs used as cluster nodes since then. Now seemed like a good time.

I already use Terraform for provisioning VMs in Proxmox, with Ansible for installing and configuring the cluster. All of this is run from a local machine. The task is therefore to move these steps off the local machine and run them from pipelines instead.

In a perfect scenario I would have some tool with access to the Proxmox API which could spawn clusters on-demand and do all of the lifecycle management. There are a couple of options for other hypervisors, but I haven’t found anything for Proxmox at the time of this writing.

Enter GitHub Actions and self-hosted runners.

Scope

Since I’m using repository runners, spinning up new runners has to be an easy process that scales without the need for manual steps.

The criteria I have:

- Provisioning and configuring runners should be done using IaC and workflows

- The initial runner is the only runner which should be provisioned and configured manually

- A runner connected to a repository should be able to do the following in a workflow:

  - Use Terraform to provision new VMs in Proxmox

  - Use Ansible to configure VMs

Architecture

High-level

graph TB

subgraph github_actions_runner_spawner_vm[VM: GitHub Actions Runner Spawner]

github_actions_runner_spawner[Runner: GitHub Actions Runner Spawner]

repo_1_n_runner[Container: Repository 1..N Runner]

end

subgraph github[GitHub.com]

github_actions_self_hosted_runners[GitHub Actions Self Hosted Runners Repository]

repo_1_n[Repository 1..N]

end

github_actions_runner_spawner-->|Connects to repository|github_actions_self_hosted_runners

github_actions_runner_spawner-->|Spawns|repo_1_n_runner

repo_1_n_runner-->|Connects to repository|repo_1_n

tf_runner_repo[GitHub Actions Runner Spawner Terraform Repository]-->|Manually provisions|github_actions_runner_spawner_vm

ansible_runner_repo[GitHub Actions Runner Spawner Ansible Repository]-->|Manually configures|github_actions_runner_spawner_vm

In simple terms: GitHub Actions Runner Spawner is just a runner installed directly on a VM to spawn other runners as containers on the same host. The runners in containers are connected to other repositories holding either Terraform config or Ansible playbooks.

Network

graph TB

gha_vlan[VLAN - GitHub Actions Runners - 10.0.7.0/24]

gha_vlan-->|Port 443|proxmox_vlan[VLAN - Proxmox - 10.0.2.0/24]

gha_vlan-->|Port 22|k3s_vlan[VLAN - k3s - 10.0.3.0/24]

The firewall rules give the runner VLAN access to other VLANs, more specifically to the Proxmox API (port 443) and the SSH port (22) of the k3s cluster nodes.

k3s provisioning

graph TB

subgraph github_actions_runner_spawner_vm[VM: GitHub Actions Runner Spawner]

k3s_tf_repo_runner[Container: k3s Terraform Repository Runner]

k3s_ansible_repo_runner[Container: k3s Ansible Repository Runner]

end

subgraph github[GitHub.com]

tf_repo[k3s Terraform Repository]

ansible_repo[k3s Ansible Repository]

end

subgraph proxmox_vlan[Proxmox VLAN]

pve_hosts[Proxmox hosts]

end

subgraph k3s_vlan[k3s VLAN]

k3s_hosts[k3s hosts]

end

tf_repo-->|Delegate workflow|k3s_tf_repo_runner

ansible_repo-->|Delegate workflow|k3s_ansible_repo_runner

k3s_tf_repo_runner-->|Access|pve_hosts

k3s_ansible_repo_runner-->|Configure|k3s_hosts

pve_hosts-->|Provision|k3s_hosts

Both k3s repositories have a runner connected to them.

Implementation

The GitHub Actions Self Hosted Runners Repository does the following:

- Builds and uploads container images for runners to GitHub Packages

- Spins up new runners as containers on GitHub Actions Runner Spawner using docker compose
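The image build is not shown in this post. A minimal sketch of what such a workflow could look like follows. The file name, the `Dockerfile` location, the `ubuntu-latest` runner and the action versions are all assumptions; only the registry and image reference are taken from the files shown in this post.

```yaml
# Hypothetical file: .github/workflows/build_image.yaml
name: "Build runner image"

on:
  push:
    branches: [main]
    # Assumed location of the image definition
    paths: ['Dockerfile']
  workflow_dispatch:

env:
  REGISTRY: ghcr.io

jobs:
  build_and_push:
    name: Build and push runner image
    # Assumption: the image is built on a GitHub-hosted runner
    runs-on: ubuntu-latest
    permissions:
      contents: read
      # Needed for GITHUB_TOKEN to push to GitHub Packages
      packages: write
    steps:
      - uses: actions/checkout@v4

      - name: Login to the image registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build and push the image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          # Mirrors the image reference used in docker-compose.yaml
          tags: ghcr.io/fredrickb/github-actions-self-hosted-runners:2.0.0
```

Keeping the build in a separate workflow from the spawn step means a change to `docker-compose.yaml` alone does not trigger an image rebuild.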

The workflow for spinning up new runners:

.github/workflows/spawn_runners.yaml:

name: "Spawn runners"

on:
  push:
    branches: [main]
    paths: ['.github/workflows/spawn_runners.yaml', 'docker-compose.yaml']
  workflow_dispatch:

env:
  REGISTRY: ghcr.io

jobs:
  spawn_runners:
    # Delegate the job to the self hosted runner
    # running on VM `GitHub Actions Runner Spawner`
    runs-on: [self-hosted]
    name: Spawn runners
    steps:
      - uses: actions/checkout@v4

      # Login to the container image registry
      - name: Login to the image registry
        uses: docker/login-action@65b78e6e13532edd9afa3aa52ac7964289d1a9c1
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      # Spawn runners as containers
      - name: Spawn runners in containers
        env:
          # Fine-Grained Personal Access Token with
          # access to the repositories
          PAT: ${{ secrets.SELF_HOSTED_RUNNERS_HOMELAB_PAT }}
        run: docker compose up -d

docker-compose.yaml:

services:
  # Runner connected to the repository with the
  # k3s terraform config
  k3s_terraform_runner:
    container_name: k3s-terraform-runner
    image: ghcr.io/fredrickb/github-actions-self-hosted-runners:2.0.0
    restart: always
    environment:
      - URL=<URL of repository holding k3s terraform config>
      - NAME=k3s-terraform-runner
      - PAT=${PAT}

  # Runner connected to the repository with the
  # k3s ansible playbook
  k3s_ansible_runner:
    container_name: k3s-ansible-runner
    image: ghcr.io/fredrickb/github-actions-self-hosted-runners:2.0.0
    restart: always
    environment:
      - URL=<URL of repository holding k3s ansible playbook>
      - NAME=k3s-ansible-runner
      - PAT=${PAT}

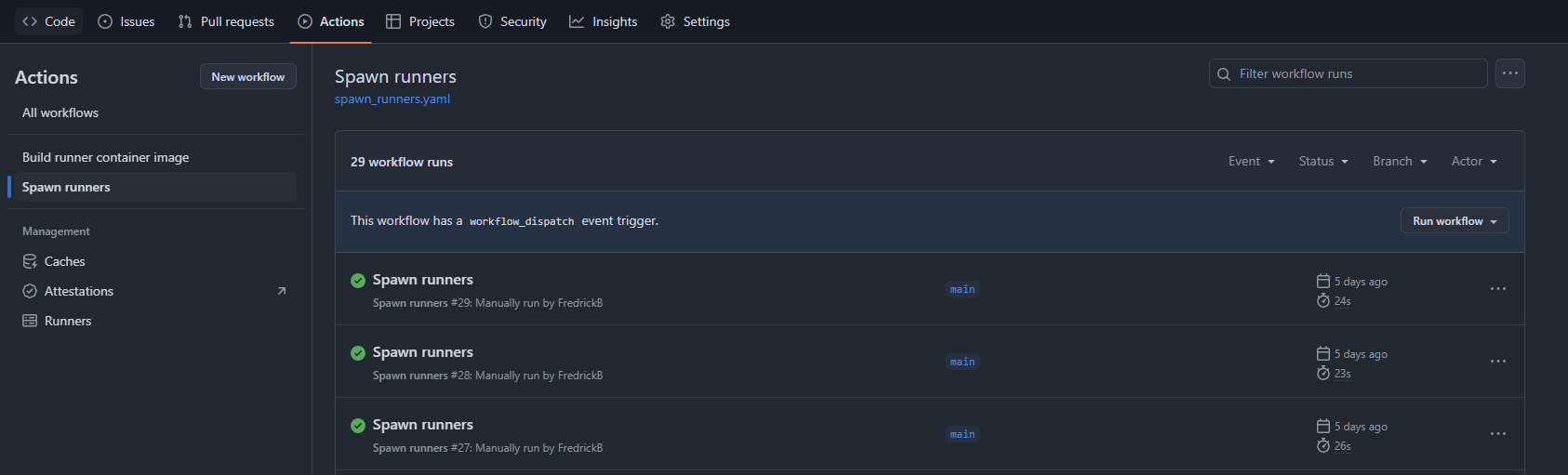

Running the workflow:

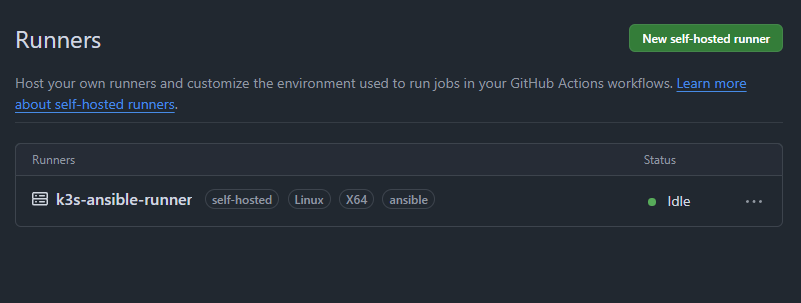

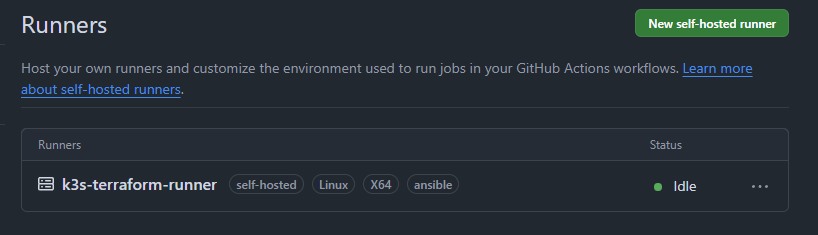

The runners then register to the repositories:

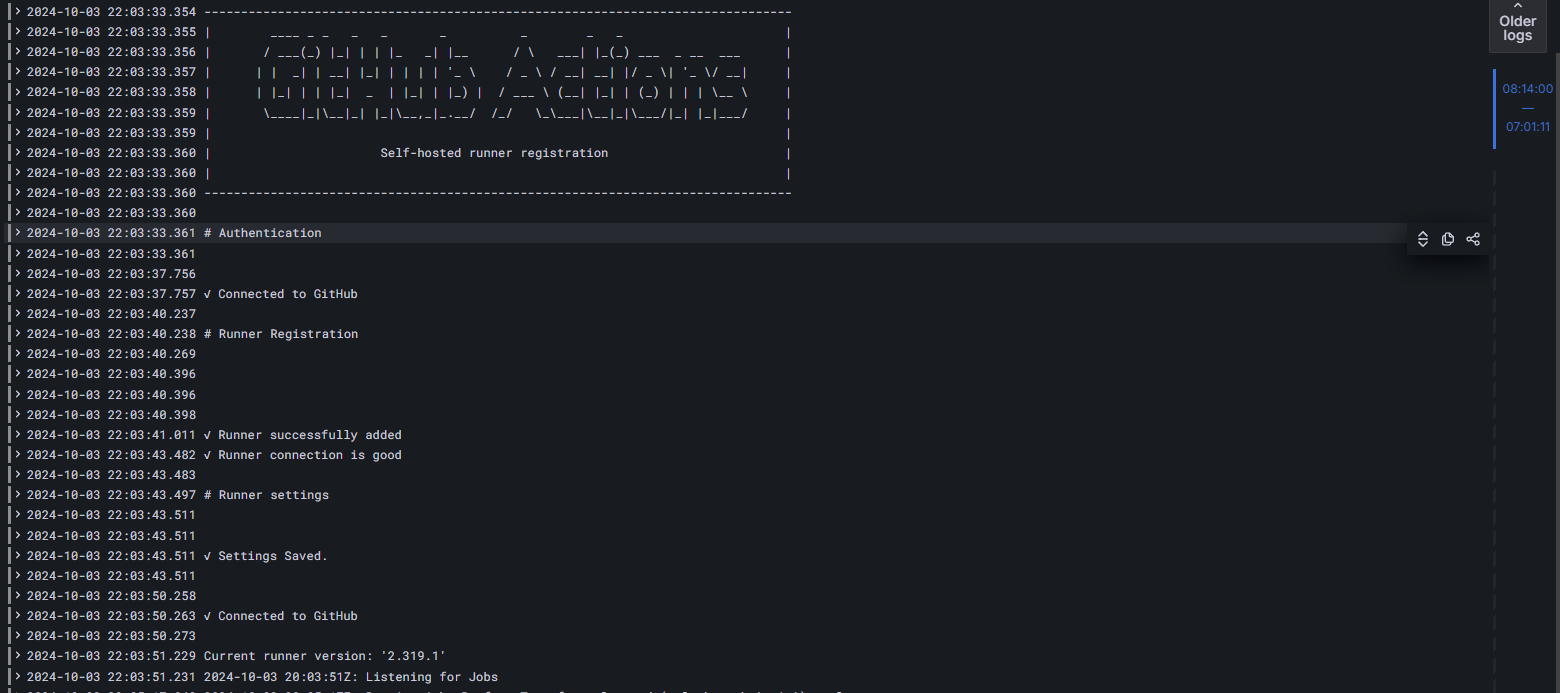

Using the journald logging driver with Promtail, Loki and Grafana provides insight into the logs of the runners.
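The logging configuration itself is not shown here. One way to enable the journald driver per container is through the `logging` key in `docker-compose.yaml`. The sketch below is an assumption about how that could look rather than the actual setup; the driver can also be set host-wide in the Docker daemon configuration.

```yaml
services:
  k3s_terraform_runner:
    container_name: k3s-terraform-runner
    image: ghcr.io/fredrickb/github-actions-self-hosted-runners:2.0.0
    restart: always
    # Assumption: logging is configured per service instead of daemon-wide
    logging:
      driver: journald
      options:
        # Tag each journal entry with the container name so the
        # runners can be told apart when querying the logs
        tag: "{{.Name}}"
```

With a tag per container, a log shipper reading the journal can turn that tag into a label, which makes it possible to filter by runner in Grafana.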

Adding additional runners boils down to:

- Including more repositories in the Fine-Grained Personal Access Token

- Extending docker-compose.yaml with more containers

- Running the workflow
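As an illustration of the second step, a new runner is one more service entry in `docker-compose.yaml`. The service name `example_runner` and its values are hypothetical placeholders; the structure follows the two existing services.

```yaml
services:
  # Existing k3s_terraform_runner and k3s_ansible_runner
  # services stay unchanged above this entry.

  # Hypothetical runner for an additional repository
  example_runner:
    container_name: example-runner
    image: ghcr.io/fredrickb/github-actions-self-hosted-runners:2.0.0
    restart: always
    environment:
      - URL=<URL of the additional repository>
      - NAME=example-runner
      - PAT=${PAT}
```

Because the spawn workflow triggers on changes to `docker-compose.yaml`, pushing this change to `main` starts the new container without touching the VM by hand.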

Everything is defined in code and automated.

Using runners for deploying k3s cluster

Setting up k3s can now be done using workflows.

Provision k3s nodes using Terraform and workflow

Snippet from workflow:

name: Terraform workflow

on:

pull_request:

branches: [main]

push:

branches: [main]

workflow_dispatch:

jobs:

plan:

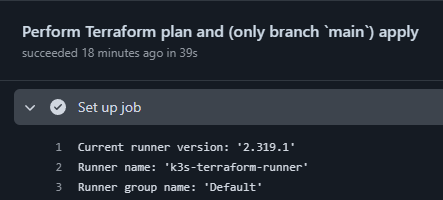

name: Perform Terraform plan and (only branch `main`) apply

runs-on: [self-hosted]

steps:

...
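The steps are elided in the snippet above. A job named "plan and (only branch `main`) apply" could be structured roughly as below. This is a hypothetical sketch, not the actual workflow: the `hashicorp/setup-terraform` step, the secret name `PROXMOX_API_TOKEN` and the Terraform variable `proxmox_api_token` are all assumptions.

```yaml
jobs:
  plan:
    name: Perform Terraform plan and (only branch `main`) apply
    runs-on: [self-hosted]
    env:
      # Hypothetical: pass the Proxmox credentials as a Terraform
      # input variable through the TF_VAR_ prefix
      TF_VAR_proxmox_api_token: ${{ secrets.PROXMOX_API_TOKEN }}
    steps:
      - uses: actions/checkout@v4

      # Assumption: Terraform is installed per run instead of
      # being baked into the runner image
      - uses: hashicorp/setup-terraform@v3

      - name: Terraform init
        run: terraform init -input=false

      # Runs for pull requests and for pushes to main
      - name: Terraform plan
        run: terraform plan -input=false -out=tfplan

      # Skipped for pull requests, where github.ref points
      # at the pull request merge ref instead of main
      - name: Terraform apply
        if: github.ref == 'refs/heads/main'
        run: terraform apply -input=false tfplan
```

Applying the saved `tfplan` file instead of planning again ensures that what gets applied is exactly what the plan step printed.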

Workflow assigned to runner:

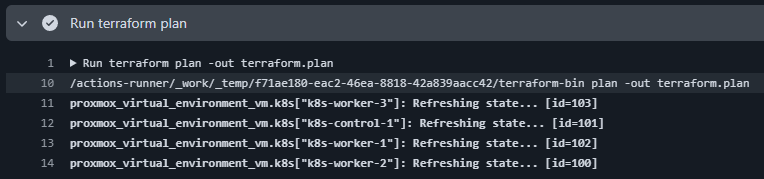

Terraform plan being run:

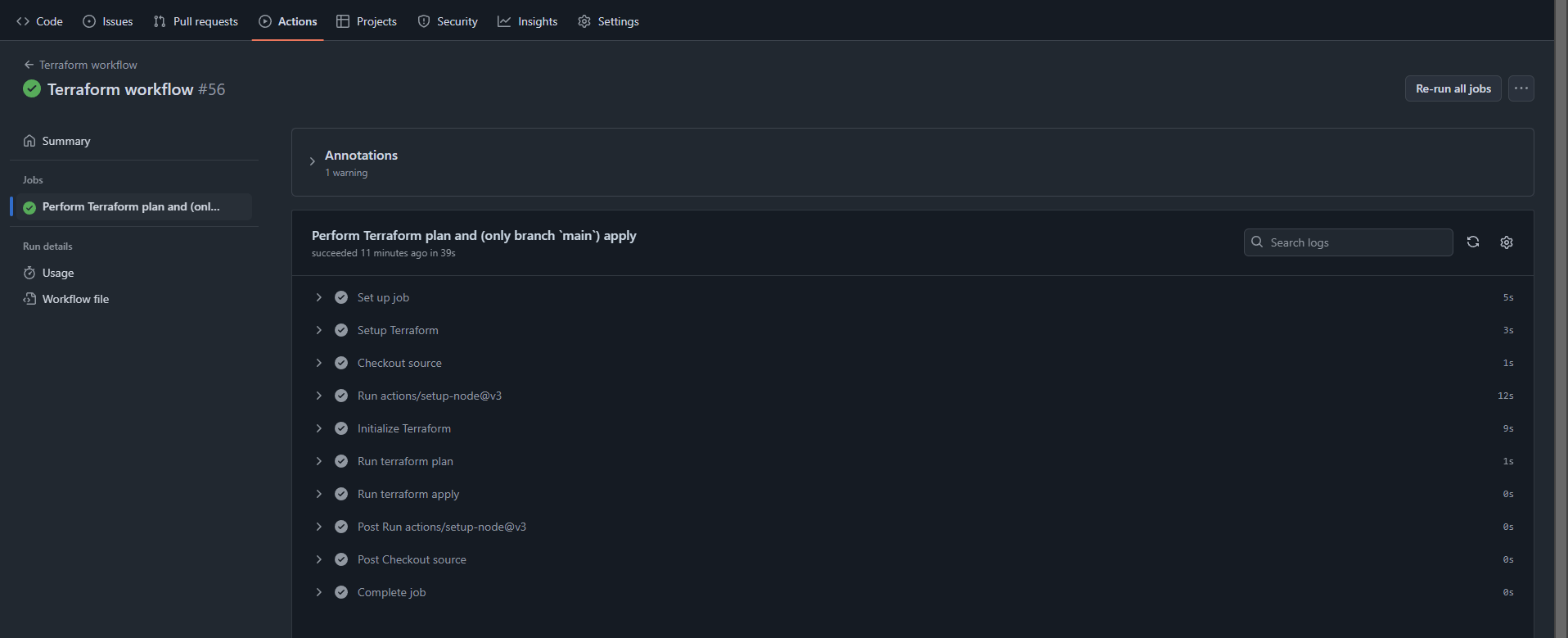

Workflow summary:

Install and configure k3s cluster using Ansible and workflow

Snippet from workflow:

name: Run Ansible playbook

on:
  push:
    branches: [main]
  workflow_dispatch:

jobs:
  run_playbook:
    name: Run playbook
    runs-on: [self-hosted, ansible]
    steps:
      ...

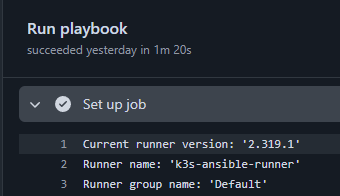
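The steps are elided in the snippet above as well. A hypothetical sketch of what they could contain is shown below. The secret name `ANSIBLE_SSH_PRIVATE_KEY` and the file names `inventory.yaml` and `playbook.yaml` are assumptions, and Ansible is assumed to be preinstalled in the runner image carrying the `ansible` label.

```yaml
jobs:
  run_playbook:
    name: Run playbook
    runs-on: [self-hosted, ansible]
    steps:
      - uses: actions/checkout@v4

      # Hypothetical: the SSH key for the k3s nodes is stored
      # as a repository secret and written to disk for this run
      - name: Write SSH key
        env:
          SSH_PRIVATE_KEY: ${{ secrets.ANSIBLE_SSH_PRIVATE_KEY }}
        run: |
          install -m 700 -d ~/.ssh
          printf '%s\n' "$SSH_PRIVATE_KEY" > ~/.ssh/id_ansible
          chmod 600 ~/.ssh/id_ansible

      - name: Run the playbook
        run: ansible-playbook -i inventory.yaml --private-key ~/.ssh/id_ansible playbook.yaml

      # Remove the key even if the playbook fails
      - name: Remove SSH key
        if: always()
        run: rm -f ~/.ssh/id_ansible
```

The `ansible` label in `runs-on` only matches runners registered with that label, so the job cannot land on a runner without Ansible installed.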

Workflow assigned to runner:

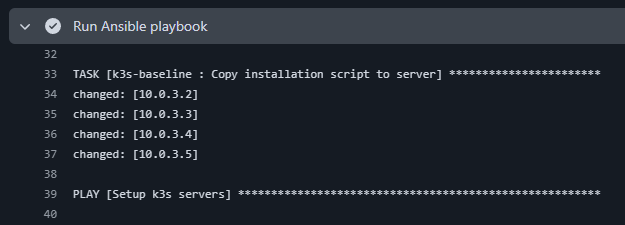

Ansible playbook running:

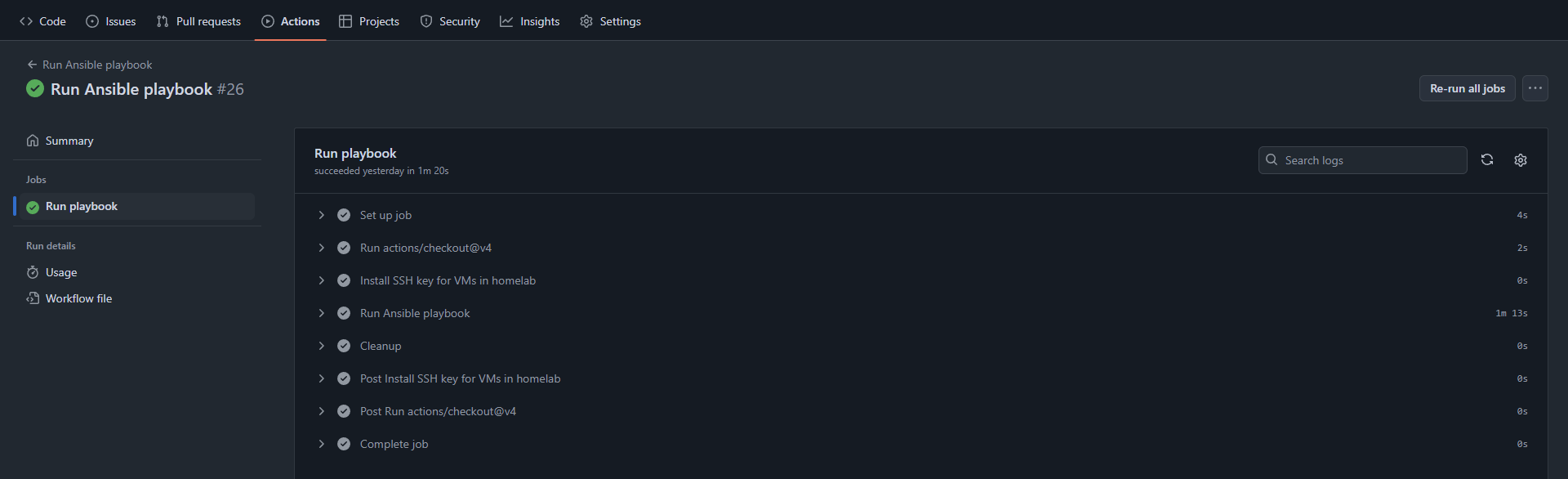

Workflow summary:

Summary

I’m at the point where I can almost bootstrap the entire cluster using IaC and automation:

| Order | Task | Method |
|---|---|---|
| 1 | Provision VMs using Terraform | Automated using workflow |
| 2 | Configure VMs using Ansible | Automated using workflow |
| 3 | Install ArgoCD | Manual step |
| 4 | Configure internal cluster workloads | Automated using ArgoCD |
| 5 | Restore PVCs from Longhorn offsite backups | Manual step |

Overall I’m pleased with the results. Changing the cluster configuration can be done using pull requests and workflows. The setup also scales for future workloads.